"What graphics API should I use?" is a question many developers ask themselves when starting a new project. The obvious choice would be the best-performing one, but when put to the test, we find that they mostly perform the same (with some negligible variations). Then it should be the most compatible one, but what if the project targets a single known platform? Or what if a developer simply prefers the style of one API? In short, the answer has always been: it depends on what you plan to achieve.

However, with the arrival of the next generation of graphics APIs, I wanted to re-explore this question. The first thing I found was that reducing the driver overhead also removed the black-box feeling that came with the previous generation, and that by exposing what is really going on under the hood, the APIs turned out to be much more similar to each other than before. I've concluded that this generation is much better suited to an abstraction layer that lets a single renderer target multiple APIs.

Preparation:

The first step was to read as much content as I could find on the subject, and look for examples that could help me during the process. For Vulkan I used: vulkan-tutorial, raw-vulkan, ImGui's example, and SaschaWillems's repo. For Direct3D 12 (Dx3D12) I used: Microsoft's samples, ImGui's example, and shuhuai's repo. The next step was to build a small demo for both APIs that displays a triangle using a shader, and benchmark it. Then I stripped the rendering code from my previous engine down to the window rendering, and started drafting an architecture.

Architecture:

The goal was to keep the architecture as lightweight as possible, and to figure out which concepts should become classes in order to represent a fully scriptable pipeline. Here's a very basic UML diagram of the current state:

Initially, I had a Mesh and a Framebuffer class, but the Mesh class was moved to the core part of the project since it basically just wraps two buffers (VBO/IBO). The Framebuffer class was merged into the RenderPass class since their roles are very similar.
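To show why a mesh needs so little renderer-specific logic, here is a minimal C++ sketch of a mesh that only owns a vertex buffer and an index buffer and records an indexed draw into a command list. `Buffer`, `CommandList` and `Mesh` are hypothetical stand-ins, not the engine's actual classes; a real backend would create a `VkBuffer` or `ID3D12Resource` instead of keeping bytes on the CPU.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical API-agnostic buffer: CPU bytes stand in for a GPU resource.
struct Buffer {
    std::vector<std::uint8_t> data;
    std::size_t stride = 0; // bytes per element
    std::size_t count() const { return stride ? data.size() / stride : 0; }
};

// Hypothetical command list that simply records the draws it receives.
struct CommandList {
    struct Draw { const Buffer* vbo; const Buffer* ibo; std::uint32_t indexCount; };
    std::vector<Draw> draws;
    void drawIndexed(const Buffer& vbo, const Buffer& ibo, std::uint32_t indexCount) {
        draws.push_back({&vbo, &ibo, indexCount});
    }
};

// A mesh is little more than a VBO/IBO pair plus a draw helper.
class Mesh {
public:
    template <typename Vertex>
    Mesh(const std::vector<Vertex>& vertices, const std::vector<std::uint32_t>& indices) {
        vbo_.stride = sizeof(Vertex);
        vbo_.data.resize(vertices.size() * sizeof(Vertex));
        if (!vertices.empty()) std::memcpy(vbo_.data.data(), vertices.data(), vbo_.data.size());

        ibo_.stride = sizeof(std::uint32_t);
        ibo_.data.resize(indices.size() * sizeof(std::uint32_t));
        if (!indices.empty()) std::memcpy(ibo_.data.data(), indices.data(), ibo_.data.size());
    }

    // Record one indexed draw covering the whole index buffer.
    void draw(CommandList& cmd) const {
        cmd.drawIndexed(vbo_, ibo_, static_cast<std::uint32_t>(ibo_.count()));
    }

    std::size_t vertexCount() const { return vbo_.count(); }

private:
    Buffer vbo_;
    Buffer ibo_;
};
```

Because nothing in `Mesh` depends on the active API, it fits naturally in the core, while the renderer only has to implement the buffer and command-list primitives.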

Scripting:

For the scripting, I'm using luabind-deboostified built with LuaJIT. This allows the use of classes and inheritance, as well as the manipulation of C data through LuaJIT's FFI extension. The first step is to create a window object, which is done in the script client.lua. Here's the minimal code:

```lua
--[[
 - @file client.lua
 - @brief
]]
class 'client' (orb.Window)

function client:__init()
    orb.Window.__init(self, 1280, 720, "Engine", orb.Renderer.Vulkan)
end

instance = client()
```

A window is assumed to be a dynamic object, so the engine takes care of calling the update function and providing the delta time between frames. There are two ways a user can hook into the update call: either by connecting a signal to the window, or by overriding the update virtual. Both are shown here:

```lua
--[[
 - @file client.lua
 - @brief
]]
class 'client' (orb.Window)

function client:__init()
    orb.Window.__init(self, 1280, 720, "Engine", orb.Renderer.Vulkan)

    -- 1) signal connection
    self.on_update:connect(function(dt) -- an anonymous function is used inline
        print("UPDATE 1")
    end)
end

-- 2) virtual overriding
function client:__update(dt)
    print("UPDATE 2")
    -- call the base class implementation
    orb.Window.__update(self, dt)
end

instance = client()
```
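The two hooks map onto a common C++ pattern: a signal object holding callbacks, plus a virtual update method. Below is a minimal sketch of that pattern. `Signal`, `Window` and `Client` are hypothetical, and it assumes (not confirmed by the engine) that the base `update` is what fires `on_update`, which would explain why the Lua override calls `orb.Window.__update(self, dt)`.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical minimal signal: stores callbacks and invokes them in connection order.
template <typename... Args>
class Signal {
public:
    void connect(std::function<void(Args...)> slot) { slots_.push_back(std::move(slot)); }
    void emit(Args... args) const {
        for (const auto& slot : slots_) slot(args...);
    }
private:
    std::vector<std::function<void(Args...)>> slots_;
};

// Hypothetical window base class; the engine would call update(dt) once per frame.
class Window {
public:
    virtual ~Window() = default;
    Signal<double> on_update;

    // Assumption: the base implementation fires the signal, so overrides
    // must call Window::update for connected slots to keep running.
    virtual void update(double dt) { on_update.emit(dt); }
};

// C++ equivalent of the Lua client: one connected slot plus one override.
class Client : public Window {
public:
    std::vector<std::string> log;
    Client() {
        on_update.connect([this](double) { log.push_back("UPDATE 1"); });
    }
    void update(double dt) override {
        log.push_back("UPDATE 2");
        Window::update(dt); // call upper
    }
};
```

With this ordering, one frame logs "UPDATE 2" from the override first, then "UPDATE 1" from the slot once the base class emits the signal.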

Now, to actually render something, a RenderPass object must be created first. To do so, the user must provide at least a shader, and preferably a camera. If no camera is provided, the camera uniforms will be identity matrices. Once the pass is created, commands can be recorded through its on_record signal.

```lua
--[[
 - @file client.lua
 - @brief
]]
class 'client' (orb.Window)

function client:__init()
    local w, h = 1280, 720
    orb.Window.__init(self, w, h, "Engine", orb.Renderer.Vulkan)

    -- create camera: fov in radians, aspect ratio, nearZ, farZ
    self.camera_ = orb.Camera(math.rad(80.0), w / h, 0.1, 15000.0)
    self.camera_.position = vec3(0, 0, -5)

    -- create a cube mesh
    self.cube_ = orb.Mesh.build_cube(self.renderer, 1.0)

    -- create the render pass
    local pass = self.renderer:create_render_pass({
        shader = FileSystem:search("default.shader", true),
        camera = self.camera_,
        use_vertex_3d = true
    })

    -- record a command drawing the cube
    pass.on_record:connect(function(cmd)
        self.cube_:draw(cmd)
    end)
end

function client:__update(dt)
    -- finally, call display here
    self:display()
end

instance = client()
```
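The camera fallback described above can be sketched as a function that fills the camera uniforms for a pass and uses identity matrices when no camera is attached. `CamUBO` mirrors the `CamUBO` cbuffer declared in default.shader; `Camera`, `Mat4` and the helper names are hypothetical, and the row-vector ordering (`view * proj`) is an assumption chosen to match HLSL's `mul(vector, matrix)`.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<float, 16>; // 4x4 matrix, element (row, col) at row * 4 + col

Mat4 Identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i * 4 + i] = 1.0f;
    return m;
}

// Plain matrix product a * b.
Mat4 Multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i * 4 + j] += a[i * 4 + k] * b[k * 4 + j];
    return r;
}

struct Camera {   // hypothetical: already holds its computed matrices
    Mat4 projection;
    Mat4 view;
};

struct CamUBO {   // same member order as the shader's CamUBO cbuffer
    Mat4 projMatrix;
    Mat4 viewMatrix;
    Mat4 viewProjMatrix;
};

// No camera means identity matrices; otherwise viewProj = view * proj
// (row-vector convention: v * view * proj).
CamUBO BuildCameraUniforms(const Camera* camera) {
    if (!camera) return { Identity(), Identity(), Identity() };
    return { camera->projection, camera->view, Multiply(camera->view, camera->projection) };
}
```

Falling back to identity means a pass without a camera passes vertex positions through unchanged, which is what screen-space passes typically want.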

This is the content of the shader:

```json
{
  "shaders" : [
    {
      "type" : "vs",
      "data" : " cbuffer CamUBO : register(b0) { float4x4 projMatrix; float4x4 viewMatrix; float4x4 viewProjMatrix; }; struct VS_INPUT { float3 pos : POSITION; float2 tex : TEXCOORD; }; struct VS_OUTPUT { float4 pos : SV_POSITION; float2 tex : TEXCOORD; }; VS_OUTPUT main(VS_INPUT input) { VS_OUTPUT output; output.pos = mul(float4(input.pos, 1.0f), viewProjMatrix); output.tex = input.tex; return output; } "
    },
    {
      "type" : "fs",
      "data" : " struct VS_OUTPUT { float4 pos : SV_POSITION; float2 tex : TEXCOORD; }; struct GBuffer { float4 albedo : SV_Target0; }; GBuffer main(VS_OUTPUT input) { GBuffer output; output.albedo = float4(1.0f, 0.0f, 0.0f, 1.0f); return output; } "
    }
  ]
}
```
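The vertex shader transforms positions with `mul(float4(input.pos, 1.0f), viewProjMatrix)`; with the vector as the first argument, HLSL treats it as a row vector. A small CPU-side mirror of that expression can help when checking matrices. This is a sketch: `MulRowVector` and the type aliases are hypothetical, and `m[row][col]` refers to mathematical rows (how matrices are packed into the constant buffer is a separate concern not covered here).

```cpp
#include <array>
#include <cassert>

using Float3 = std::array<float, 3>;
using Float4 = std::array<float, 4>;
using Float4x4 = std::array<std::array<float, 4>, 4>; // m[row][col]

// CPU equivalent of HLSL mul(float4(p, 1.0f), m): p is a row vector,
// so out[col] = sum over rows of v[row] * m[row][col].
Float4 MulRowVector(const Float3& p, const Float4x4& m) {
    const Float4 v = { p[0], p[1], p[2], 1.0f };
    Float4 out{};
    for (int col = 0; col < 4; ++col)
        for (int row = 0; row < 4; ++row)
            out[col] += v[row] * m[row][col];
    return out;
}
```

Under this convention a translation lives in the last row of the matrix, so a matrix with `{0, 0, -5, 1}` as its last row moves the point (1, 2, 3) to (1, 2, -2).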

This is the simplest scenario: the cube mesh is rendered directly to the swapchain back buffer. The only thing really worth noting here is the use_vertex_3d tag set to true; it is needed because the vertex input is currently defined in the render pass and is assumed to be a 2D vertex (since you'll be drawing 2D screen quads 90% of the time). Of course, this example doesn't cover more advanced techniques such as offscreen rendering, blending, uniform buffers, or custom cameras. (You can view the sky object here, the sky shader here, and the camera object here.)
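The `use_vertex_3d` flag essentially swaps the vertex input layout the render pass declares. Here is a minimal sketch of that choice. The 3D layout matches `VS_INPUT` in default.shader (`float3 POSITION`, `float2 TEXCOORD`); the 2D layout (`float2` position plus `float2` texcoord) is an assumption. `VertexAttribute`, `VertexLayout` and `MakeVertexLayout` are hypothetical. A real backend would translate them into `VkVertexInputAttributeDescription` or `D3D12_INPUT_ELEMENT_DESC`.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical API-neutral description of one vertex attribute.
struct VertexAttribute {
    std::string semantic;      // HLSL semantic, e.g. "POSITION"
    std::uint32_t components;  // number of 32-bit floats
    std::uint32_t offset;      // byte offset inside one vertex
};

struct VertexLayout {
    std::vector<VertexAttribute> attributes;
    std::uint32_t stride = 0;  // bytes per vertex
};

// Build the vertex layout a render pass would declare for its pipeline.
VertexLayout MakeVertexLayout(bool useVertex3d) {
    constexpr std::uint32_t kFloatSize = static_cast<std::uint32_t>(sizeof(float));
    const std::uint32_t posComponents = useVertex3d ? 3u : 2u; // 2D assumed by default

    VertexLayout layout;
    layout.attributes.push_back({"POSITION", posComponents, 0u});
    layout.attributes.push_back({"TEXCOORD", 2u, posComponents * kFloatSize});
    layout.stride = (posComponents + 2u) * kFloatSize;
    return layout;
}
```

With these assumptions a 3D vertex is 20 bytes (texcoord at offset 12) and a 2D vertex is 16 bytes (texcoord at offset 8), so a mismatch between the flag and the mesh data would shift every attribute.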

Shader Conversion:

The shader is first written in HLSL and saved in a JSON file organized by stage. Then I use XShaderCompiler to convert the shader into GLSL:

```cpp
#include <map>
#include <memory>
#include <sstream>
#include <string>
#include <Xsc/Xsc.h>

// Converts one HLSL stage into GLSL 4.50 using XShaderCompiler.
std::string HLSLToGLSL(const std::string& input, orb::Shader::Stage stage)
{
    static const std::map<orb::Shader::Stage, Xsc::ShaderTarget> StageToTarget = {
        { orb::Shader::eUndefined,      Xsc::ShaderTarget::Undefined },
        { orb::Shader::eVertex,         Xsc::ShaderTarget::VertexShader },
        { orb::Shader::eTessControl,    Xsc::ShaderTarget::TessellationControlShader },
        { orb::Shader::eTessEvaluation, Xsc::ShaderTarget::TessellationEvaluationShader },
        { orb::Shader::eGeometry,       Xsc::ShaderTarget::GeometryShader },
        { orb::Shader::eFragment,       Xsc::ShaderTarget::FragmentShader },
        { orb::Shader::eCompute,        Xsc::ShaderTarget::ComputeShader }
    };

    // Xsc reads the source from a shared std::istream
    auto inputStream = std::make_shared<std::istringstream>(input);
    std::ostringstream outputStream;

    Xsc::ShaderInput inputDesc;
    inputDesc.sourceCode    = inputStream;
    inputDesc.shaderVersion = Xsc::InputShaderVersion::HLSL5;
    inputDesc.shaderTarget  = StageToTarget.at(stage);
    inputDesc.entryPoint    = "main";

    Xsc::ShaderOutput outputDesc;
    outputDesc.sourceCode          = &outputStream;
    outputDesc.shaderVersion       = Xsc::OutputShaderVersion::GLSL450;
    outputDesc.options.autoBinding = true; // assign binding slots automatically
    outputDesc.options.optimize    = true;

    std::string output;
    try
    {
        if (Xsc::CompileShader(inputDesc, outputDesc))
        {
            output = outputStream.str();
        }
    }
    catch (const std::exception& e)
    {
        throw Exception(e.what());
    }
    return output; // empty if compilation failed
}
```
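HLSLToGLSL receives the stage as an `orb::Shader::Stage`, while the shader JSON tags each entry with a short `"type"` string. One way to bridge the two is a lookup table. In this sketch the `Stage` enum is a stand-in that reuses the enumerator names from the code above; only `"vs"` and `"fs"` appear in default.shader, so the other keys (`"hs"`, `"ds"`, `"gs"`, `"cs"`) are hypothetical.

```cpp
#include <cassert>
#include <map>
#include <string>

// Stand-in for orb::Shader::Stage, using the same enumerator names.
enum Stage { eUndefined, eVertex, eTessControl, eTessEvaluation, eGeometry, eFragment, eCompute };

// Map the "type" field of a shader JSON entry to a stage.
// "vs" and "fs" come from default.shader; the other keys are hypothetical.
Stage StageFromType(const std::string& type) {
    static const std::map<std::string, Stage> kTypes = {
        { "vs", eVertex },   { "hs", eTessControl }, { "ds", eTessEvaluation },
        { "gs", eGeometry }, { "fs", eFragment },    { "cs", eCompute }
    };
    const auto it = kTypes.find(type);
    return it != kTypes.end() ? it->second : eUndefined; // unknown types stay undefined
}
```

Returning `eUndefined` for unknown strings keeps the lookup total, and it lines up with the `eUndefined` entries both converters already handle.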

Then I convert the GLSL into a SPIR-V binary using shaderc:

```cpp
#include <map>
#include <string>
#include <shaderc/shaderc.hpp>

// Compiles GLSL into SPIR-V with shaderc and returns the binary as raw bytes.
std::string GLSLToSPIRV(const std::string& input, orb::Shader::Stage stage)
{
    static const std::map<orb::Shader::Stage, shaderc_shader_kind> StageToKind = {
        { orb::Shader::eUndefined,      shaderc_glsl_infer_from_source },
        { orb::Shader::eVertex,         shaderc_glsl_vertex_shader },
        { orb::Shader::eTessControl,    shaderc_glsl_tess_control_shader },
        { orb::Shader::eTessEvaluation, shaderc_glsl_tess_evaluation_shader },
        { orb::Shader::eGeometry,       shaderc_glsl_geometry_shader },
        { orb::Shader::eFragment,       shaderc_glsl_fragment_shader },
        { orb::Shader::eCompute,        shaderc_glsl_compute_shader }
    };

    shaderc::CompileOptions options;
    options.SetOptimizationLevel(shaderc_optimization_level_size);
    options.SetSourceLanguage(shaderc_source_language_glsl);

    shaderc::Compiler compiler;
    // "test" is only the file name reported in error messages
    auto res = compiler.CompileGlslToSpv(input, StageToKind.at(stage), "test", options);
    if (res.GetCompilationStatus() != shaderc_compilation_status_success)
    {
        throw Exception(res.GetErrorMessage());
    }

    // The result is a range of 32-bit SPIR-V words; copy it out as bytes
    return std::string(
        reinterpret_cast<const char*>(res.cbegin()),
        reinterpret_cast<const char*>(res.cend())
    );
}
```
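GLSLToSPIRV returns the module as bytes in a `std::string`, while Vulkan's `VkShaderModuleCreateInfo` takes `codeSize` in bytes and `pCode` as a `const uint32_t*`. A minimal sketch of a conversion step, assuming a hypothetical helper name `SpirvBytesToWords`: it copies the bytes into a word vector (which guarantees 4-byte alignment) and checks the SPIR-V magic number `0x07230203`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <string>
#include <vector>

// Copy a SPIR-V byte string into 32-bit words suitable for pCode.
std::vector<std::uint32_t> SpirvBytesToWords(const std::string& bytes) {
    constexpr std::uint32_t kSpirvMagic = 0x07230203u;
    if (bytes.size() < sizeof(std::uint32_t) || bytes.size() % sizeof(std::uint32_t) != 0)
        throw std::runtime_error("SPIR-V size must be a non-zero multiple of 4 bytes");

    std::vector<std::uint32_t> words(bytes.size() / sizeof(std::uint32_t));
    std::memcpy(words.data(), bytes.data(), bytes.size());

    // The first word of a module is the magic number; the bytes are in host
    // order here because shaderc produced the words on this machine.
    if (words[0] != kSpirvMagic)
        throw std::runtime_error("not a SPIR-V module (bad magic number)");
    return words;
}
```

The resulting vector can be passed as `pCode`, with `codeSize` set to `words.size() * sizeof(uint32_t)`.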

This process is very slow in Debug (up to a second per shader) since both libraries are quite large. However, in Release it takes around 50 ms, which is much more acceptable. The size of the dependencies is also the reason why ShaderConverter is split into its own ~5 MB DLL.
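Per-shader timings like these can be collected by wrapping each conversion call with `std::chrono`. This is a minimal sketch; `TimeMs` is a hypothetical helper, and the commented usage assumes the HLSLToGLSL and GLSLToSPIRV functions from above are linked in.

```cpp
#include <cassert>
#include <chrono>
#include <utility>

// Runs fn once and returns {result, elapsed wall-clock milliseconds}.
template <typename Fn>
auto TimeMs(Fn&& fn) {
    const auto start = std::chrono::steady_clock::now();
    auto result = std::forward<Fn>(fn)();
    const auto end = std::chrono::steady_clock::now();
    const double ms = std::chrono::duration<double, std::milli>(end - start).count();
    return std::make_pair(std::move(result), ms);
}

// Usage (assuming the converters above):
//   auto [spirv, ms] = TimeMs([&] {
//       return GLSLToSPIRV(HLSLToGLSL(source, orb::Shader::eVertex), orb::Shader::eVertex);
//   });
```

`steady_clock` is used because it is monotonic, so the measurement is not affected by system clock adjustments.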

Builds:

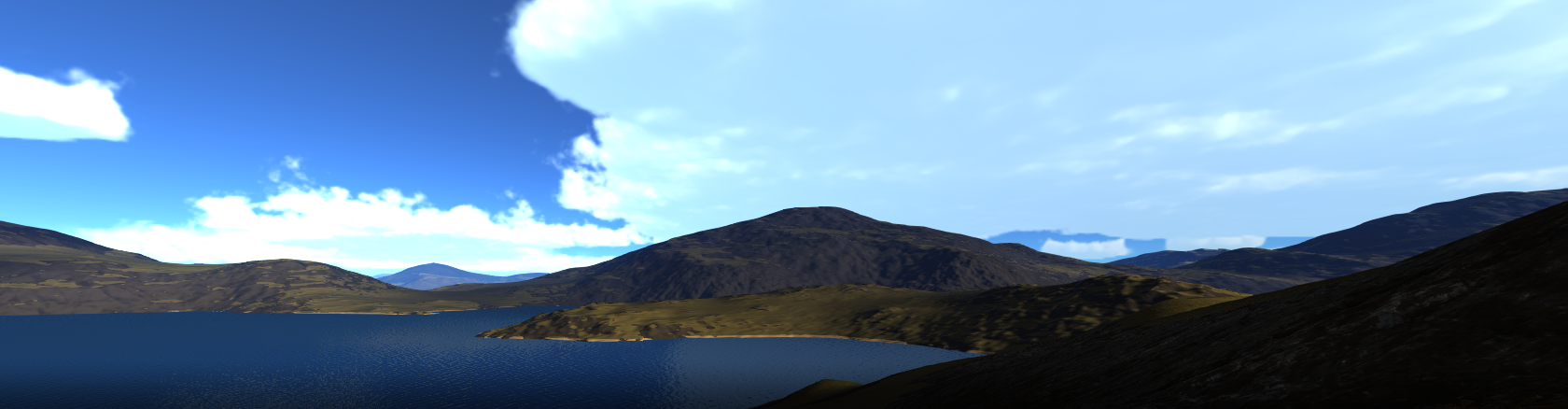

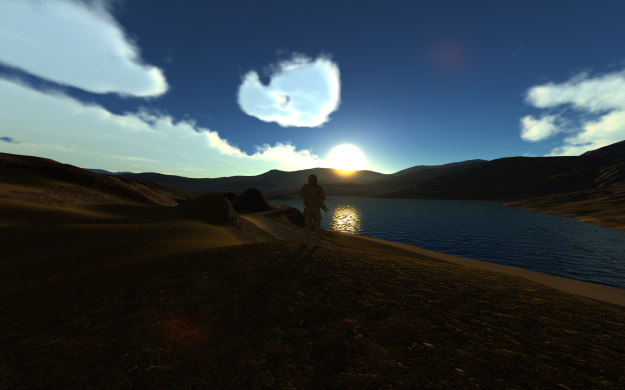

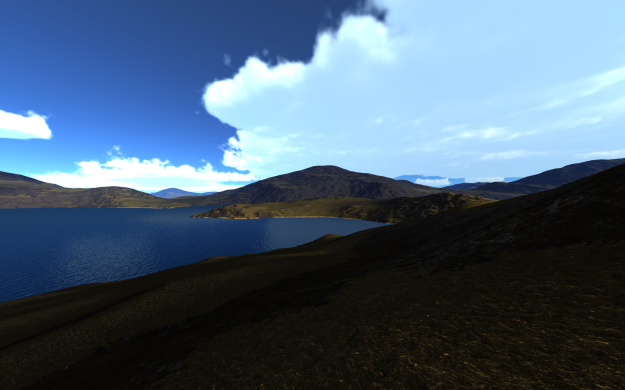

I've attached a build of the current state of the project, displaying a light scattering pass blended with a GUI pass. Keep in mind that it requires the Microsoft Visual C++ 2017 Redistributable, and has only been tested on Windows 10 with an NVIDIA GTX 970. (Drag it into a folder and run Sky.exe. The default renderer is Vulkan, but you can switch to Dx3D12 in the client.lua file.)

Conclusion:

I think it's fair to say that there's a steep learning curve for both APIs: you'll be writing much more code to get things working the first time. But once everything is properly abstracted away, that's when they really start to shine. I think both Khronos and Microsoft did a very solid job with this generation, and hopefully more devs will take the leap.